Il/legal War

Expanding the Frame of Meaningful Human Control from Military Operations to Democratic Governance. A guest essay by Lucy Suchman

It might seem hopelessly naive, in the current moment, to reiterate the premise that wars conducted by nation states are initiated in the name of ensuring the security of their citizens. Territorial expansion, control over resources, and the suppression or elimination of populations categorized as the enemy are the dominant bases for ongoing military operations around the world. While these realities must be acknowledged, the demilitarization of international relations requires, among other things, the reinforcement and reconstruction of the legal and political institutions available for holding nation states accountable to the law and to their citizenry.

Since the conclusion of the Second World War, a framework of international law has provided the basis for adjudicating the legality of military operations undertaken by states in the name of the security of their citizens. At the foundation of International Humanitarian Law (IHL) is the Principle of Distinction, which states:

‘Rule 1. The parties to the conflict must at all times distinguish between civilians and combatants. Attacks may only be directed against combatants. Attacks must not be directed against civilians.’1

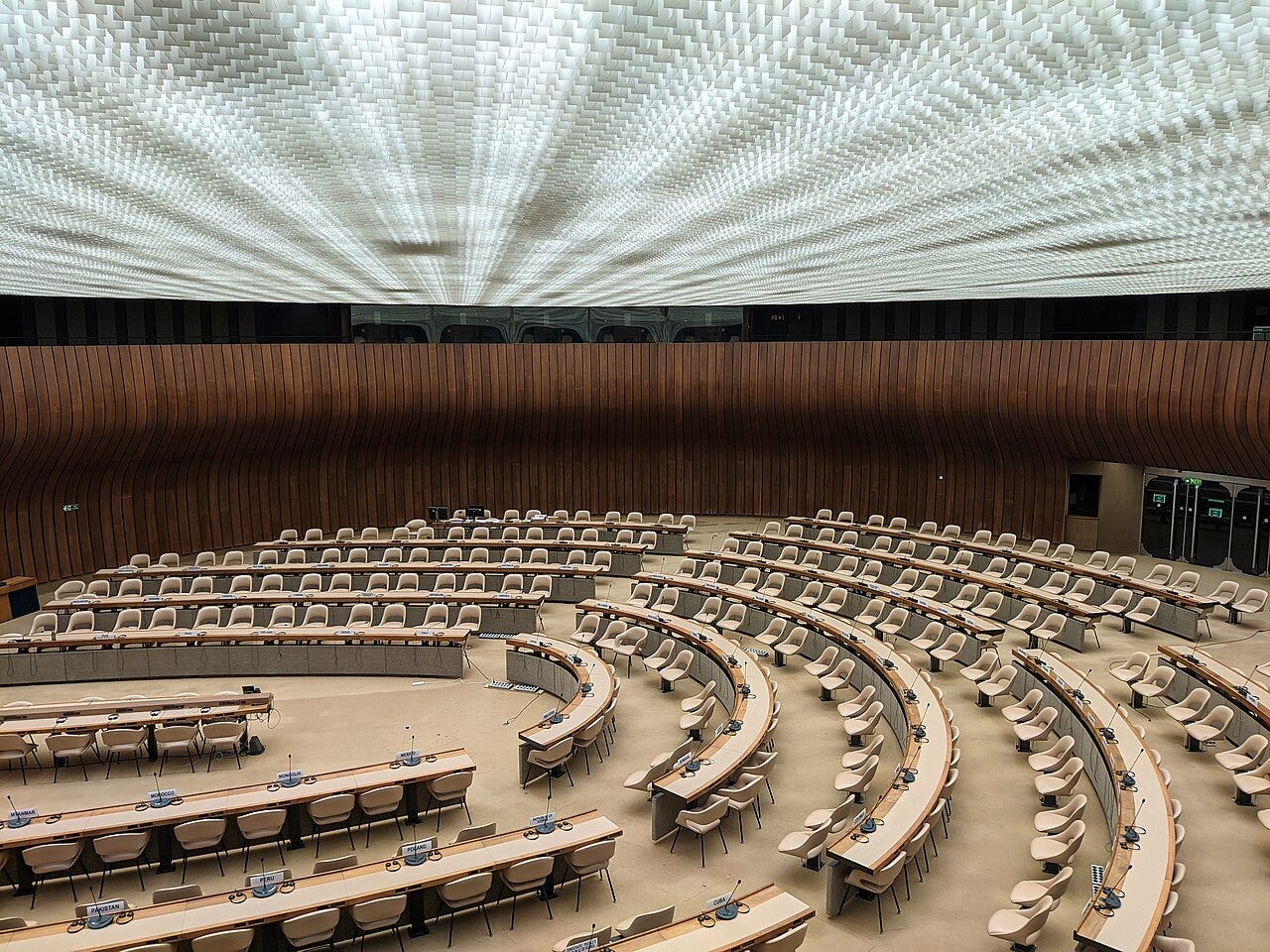

As a member of the International Committee for Robot Arms Control, I took this principle as a foundation for my testimony at a 2016 meeting of experts at the UN Convention on Certain Conventional Weapons (CCW) on the question of autonomy in weapon systems (Suchman 2016). I spoke as an anthropologist engaged for over three decades with the fields of artificial intelligence (AI) and human-computer interaction, and as a founding member, in the 1980s, of the group Computer Professionals for Social Responsibility. Our focus then was to provide critical analysis of US Department of Defense plans for computer control of nuclear weapon systems, and associated initiatives in AI-enabled warfighting.2

The impossibility of designing autonomous weapons adherent to IHL

Within the context of UN deliberations, autonomous weapon systems (AWS) have been defined as systems in which the critical functions of target selection and the initiation of violent force have been fully automated (ICRC 2018, Asaro 2019). The crucial question for any AWS intended to adhere to IHL is whether the discriminatory capacities that are the precondition for legal killing can be reliably encoded. My argument is that they cannot be.

Machine autonomy presupposes the unambiguous specification by human designers of the conditions under which associated actions should be taken. In the case of autonomous weapons, adherence to the Principle of Distinction would require that weapon systems have adequate sensors and associated processing algorithms to separate combatants from civilians, and to differentiate wounded or surrendering combatants from those who pose an imminent threat. Existing technologies such as infrared temperature sensors, cameras, and associated image processing algorithms may be able to identify someone as a human, even to associate that human image with a name, but they cannot make the discriminations among persons that are required by the Principle of Distinction. There is no definition of a civilian that can be translated into a recognition algorithm.

More fundamentally, it is impossible to encode the laws of war, or any laws, computationally, because all laws presuppose – rather than state explicitly – the capacities required to assess their applicability to a specific situation.

As normative prescriptions, legal constraints assume judgements regarding their appropriate application that they do not, and could never, fully specify. Insofar as legal adherence requires incomputable judgements, the situational awareness that the Principle of Distinction presupposes is not translatable into machine-executable code. If this is the case, autonomous weapons – at least those that target persons – are in violation of IHL and should be prohibited.

Once operations are underway, might meaningful human control include refusal to deploy a weapon system at all, given a war’s illegality?

The call for meaningful human control has been a useful intervention by civil society organizations, including Stop Killer Robots, at the CCW and more broadly, not least because it opens the question of what forms of control over warfighting are meaningful. At a minimum, meaningful human control assumes sufficient time for validation of the legitimacy of targets, an assumption that is antithetical to the aim of acceleration that justifies the automation of weapon systems.

Recent revelations about the use by the Israel Defense Forces (IDF) of AI-enabled targeting raise new questions about assertions by both the US and Israel that their weapon systems will always be subject to meaningful human control. The general claim for AI-enabled targeting is that it increases accuracy, which under IHL would mean that only those who are combatants and pose an imminent threat are targeted.

But we now know, from reporting in the joint Israeli/Palestinian outlets +972 and Local Call, that the systems in use in Gaza are optimized for the acceleration of target generation, in both the number of targets and the speed with which they are ‘recommended’ to those in command (Abraham 2023, 2024). As then IDF spokesman Daniel Hagari explained in November of 2023, in the use of these systems “the emphasis is on damage, and not on accuracy” (Abraham 2023).

In the context of the Gaza genocide, at the time of this writing, 17 young Israelis called up by the IDF have taken the extraordinary step of refusing to enlist. They are supported by a broader wave of more than 300 reservists who have already served but now seek support from the refusal movement Yesh Gvul, Hebrew for ‘There is a limit’ (Ziv 2025). While such refusals remain the exception among Israelis conscripted to serve in the Occupied Territories, they might be read as a step toward expanding the frame of meaningful human control.

In a related intervention, in September 2025 retired Navy Captain Jon Duffy published an opinion piece in the US defense outlet Defense One about the US attack on a small Venezuelan vessel in the Caribbean, in international waters, which killed all eleven people on board (Duffy 2025). Declaring this action “killing without process” and a violation of international law, Duffy concludes: “unlawful orders—foreign or domestic—must be disobeyed. To stand silent as the military is misused is not restraint. It is betrayal.”

Expanding the frame of meaningful human control to governance and accountability

Duffy’s call shifts the focus from control over the operations of a particular weapon system to the prior question of how decisions to initiate or engage in violent force are adjudicated in the first instance. In the case of the US, investments in hegemonic militarism, economic special interests, and associated strategies of geopolitical positioning have historically overridden democratic expression. While the frame of meaningful human control has operated as a powerful tool in the context of the debate over AWS, we need to ask more fundamental questions regarding the legitimacy of any instance of the initiation of force, the premise that warfighting can be rationally controlled, and the realities of impunity in warfighting. Put another way, we need to expand the frame of meaningful human control from the operations of weapon systems to wider questions of democratic governance and legal accountability.

In 1973, over the veto of then-President Richard Nixon, the US Congress passed the War Powers Resolution, a measure to limit the mandate of the President to commit US forces to armed conflict without Congressional consent. Critical reviews of the ensuing history of US military operations question the resolution’s efficacy in curbing the unilateral powers of the US President or restraining US military expansion, a limitation demonstrated most recently by Donald Trump’s decision to order strikes on Iran in June 2025 (Cavaliere 2025, Grumbach and Lee 2025). This expansion of Presidential powers has arguably been exacerbated by subsequent Authorizations for the Use of Military Force. The “unhappy legal history” of the War Powers Resolution (Dudziak 2023) makes clear that while further Congressional efforts to assert democratic control over the initiation of war are necessary, they are not sufficient.

More fundamental to the future of US foreign policy and the security of all of those affected by it is a redirection of US ideology and resources away from military supremacy and arms industry investment, toward new forms of sustained, creative, and good faith diplomacy. Along with multilateral institutions capable of enforcing legal accountability in the conduct of war, meaningful democratic control of the use of force requires accountability for war’s initiation and the dismantling of structures of militarism that are invested in war’s perpetuation.

In Frames of War, Judith Butler observes that the myth of precision, of “a ‘clean’ war whose destruction has perfect aim” (2010: xviii), is part of the apparatus that holds militarism in place. In The Force of Non-Violence, Butler embraces the proposition that a commitment to nonviolence requires thinking beyond an instrumentalist framework, asking “what new possibilities for ethical and political critical thought result from that opening?” (2020: 19).

Following Butler, my concerns center on the question of how prevailing legal and normative debates over the future of war are framed in ways that place more radical approaches to demilitarization off the table.

Arms control and disarmament are essential multilateral frameworks within which to mitigate the worst abuses of armed conflict, and the call for meaningful human control in the context of AWS is vital to the project of limiting warfighting’s further automation.

At the same time, we need to recognize that a technopolitical imaginary of military operations governed by reason and control is part of the problem of, not the solution to, relations based on military dominance. AWS are the logical extension of military doctrine that posits further automation of war as the necessary response to warfighting’s inevitable acceleration. The result is a self-justifying arms race that benefits those whose returns rely on ever-expanding investments in militarism. The restoration of meaningful control to such a machine requires moving outside of the frame that sustains warfighting’s self-justifying logics, enabling greater investments in diplomacy and new forms of accountability to democratic processes, in which those in whose names war is perpetuated have the governing voice.

About the author

Lucy Suchman is Professor Emerita at Lancaster University in the UK and a member of ICRAC. She was previously a Principal Scientist at Xerox’s Palo Alto Research Center (PARC), where she spent twenty years as a researcher. Her current research extends her longstanding critical engagement with the fields of artificial intelligence and human-computer interaction to the domain of contemporary militarism.

n.b. A longer version of this piece has been published in The Realities of Autonomous Weapons. Bristol, UK: Bristol University Press. Available at https://doi.org/10.51952/9781529237191.ch008.

References

Abraham, Yuval. 2023. ‘“A mass assassination factory”: inside Israel’s calculated bombing of Gaza’, +972 Magazine, 30 November. https://www.972mag.com/mass-assassination-factory-israel-calculated-bombing-gaza/

Abraham, Yuval. 2024. ‘“Lavender”: The AI machine directing Israel’s bombing spree in Gaza’, +972 Magazine, 3 April. https://www.972mag.com/lavender-ai-israeli-army-gaza/

Asaro, Peter. 2019. ‘Algorithms of Violence: Critical Social Perspectives on Autonomous Weapons’, Social Research, 86(2), Special Issue on Algorithms, Summer, pp. 537-555.

Butler, Judith. 2010. Frames of War: When is Life Grievable? London and Brooklyn: Verso.

Butler, Judith. 2020. The Force of Non-Violence. London and New York: Verso.

Cavaliere, Melissa A. 2025. ‘The War Powers Resolution: A Failed Check on Executive Power and a Contributor to U.S. Military Expansion’, Doctoral Dissertations and Projects, 6936. https://digitalcommons.liberty.edu/doctoral/6936

Dudziak, Mary. 2023. ‘The Unhappy Legal History of the War Powers Resolution’, Modern American History, 6(2): 270-273.

Duffy, Jon. 2025. ‘A killing at sea marks America’s descent into lawless power’, Defense One, 8 September. https://www.defenseone.com/ideas/2025/09/killing-sea-americas-descent-lawless-power/407949/?oref=defense_one_breaking_nl

Grumbach, Gary and Carol E. Lee. 2025. ‘Presidents' ordering military action without Congress' approval has become routine. Here's why’, NBC News, 22 June. https://www.nbcnews.com/politics/white-house/presidents-ordering-military-action-congress-approval-become-routine-rcna214379

International Committee of the Red Cross. 2018. ‘Towards Limits on Autonomy in Weapons Systems’, 9 April. https://www.icrc.org/en/document/towards-limits-autonomous-weapons

Ornstein, Severo, Brian Cantwell Smith, and Lucy Suchman. 1984. ‘Strategic Computing’, Bulletin of the Atomic Scientists, 40(10): 11-15. https://www.tandfonline.com/doi/abs/10.1080/00963402.1984.11459292

Parnas, David L. 1985. ‘Software aspects of strategic defense systems’, Communications of the ACM, 28(12): 1326-1335. https://dl.acm.org/doi/pdf/10.1145/214956.214961

Suchman, Lucy. 2016. ‘Situational awareness and adherence to the principle of distinction as a necessary condition for lawful autonomy’, in R. Geis (ed.), Lethal Autonomous Weapon Systems, pp. 273-283. Berlin: Federal Foreign Office.

Ziv, Oren. 2025. ‘Israeli army refusers defy harsher backlash to protest genocide’, +972 Magazine, 8 September. https://www.972mag.com/israeli-army-refusers-gaza-genocide/
