Earlier this summer, Francesca Albanese, the UN Special Rapporteur on the situation of human rights in the Palestinian territories occupied since 1967, released her report 'From economy of occupation to economy of genocide'.

Here’s a summary:

Throughout the report, Albanese describes how the Israeli army (IDF) uses technology to facilitate the killing and displacement of Palestinians, writing:

“Repression of Palestinians has become progressively automated, with tech companies providing dual-use infrastructure to integrate mass data collection and surveillance, while profiting from the unique testing ground for military technology offered by the occupied Palestinian territory.”

The Special Rapporteur makes specific reference to automated decision support systems like Lavender and the Gospel, which support the IDF’s strategic military decision-making. They are trained on the vast amount of information that the Israeli Government holds on Palestinians and use artificial intelligence to speed up the creation of “kill lists” – people and places to target. Albanese explains:

“The Israeli military has developed artificial intelligence systems, such as ‘Lavender’, ‘Gospel’ and ‘Where’s Daddy’ to process data and generate lists of targets, reshaping modern warfare and illustrating the dual-use nature of artificial intelligence.”

The Lavender system, which was first reported on by +972 Magazine with support from Meron Rapoport, is particularly egregious. It is used to create lists of people to target, scoring individuals based on data like social media connections and how often they change their addresses. If the resulting score surpasses a certain threshold, the person is added to a target list. While IDF personnel could review the decisions churned out by Lavender, reports show they did not. +972 Magazine reported “sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based”.

We define digital dehumanisation as a process where humans are reduced to data, which is then used to make decisions and/or take actions that negatively affect their lives. Israel’s use of technologies like “Lavender” and “Gospel” is a clear and extreme example of this.

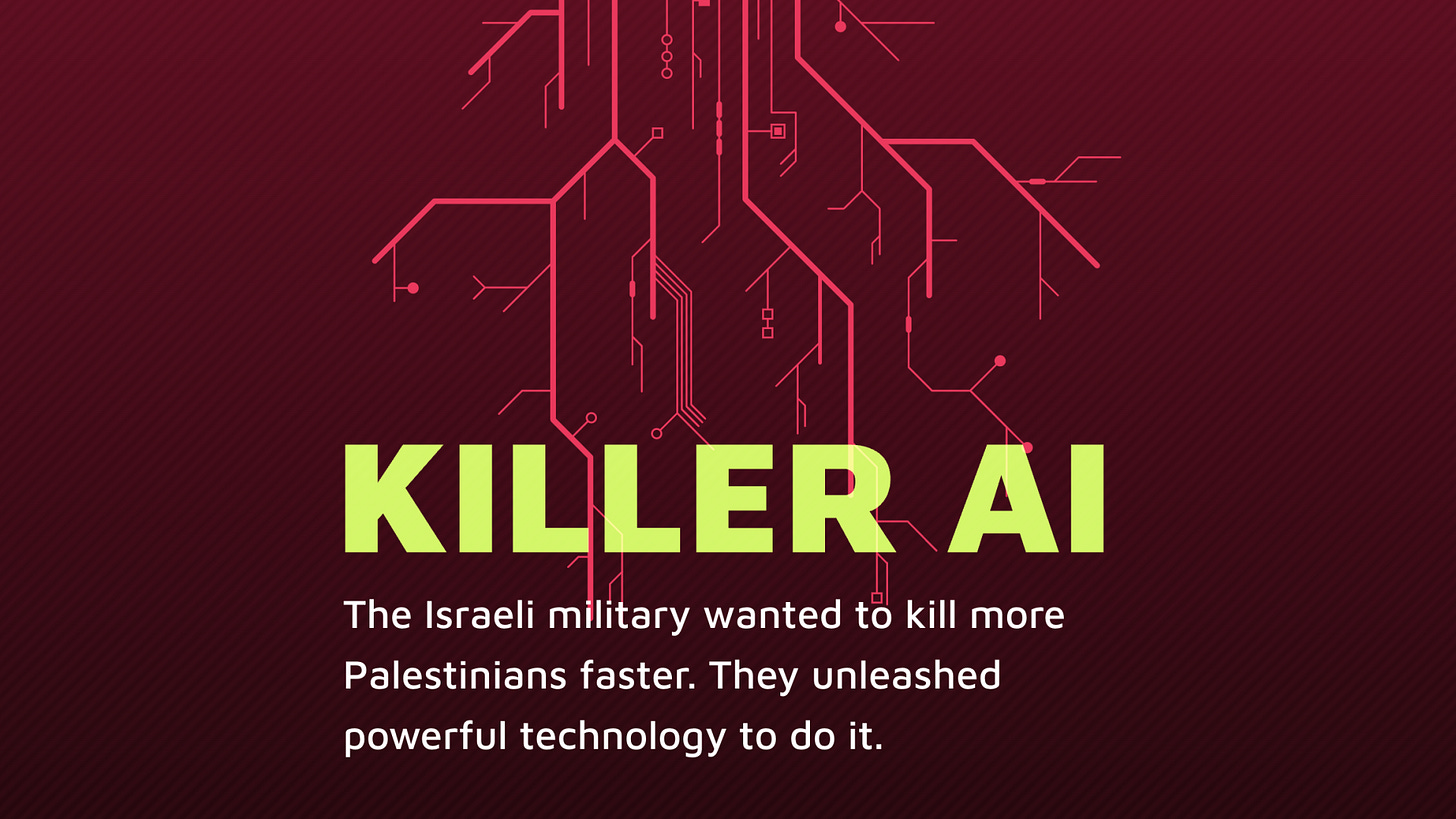

In response to the news that the Israeli Army was using the Lavender decision support system to generate, and subsequently strike, human targets in Gaza, Visualizing Palestine created their Stop Killer AI story. Visualizing Palestine is an organisation which uses data and research to visually communicate Palestinian experiences to provoke narrative change. Their Stop Killer AI story demonstrates how the mass surveillance apparatus that touches every aspect of Palestinian life has been weaponised in a new and especially lethal way. The scroll-story merges interactive graphics and research to create a compelling online experience that demystifies how Lavender operates and how the Israeli army used it to enable and justify the mass killing of Palestinian civilians in Gaza.

We were privileged to speak to two people so close to the issue about the history of oppressive technologies in Palestine, the current AI-powered implications, and future resistance.